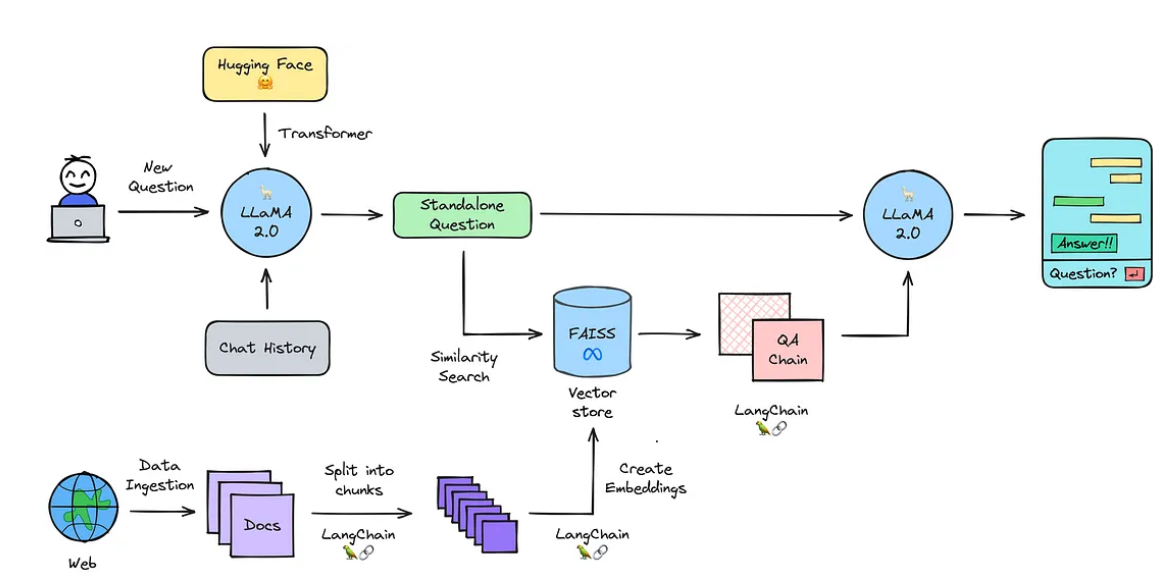

Llama 2 is a family of state-of-the-art open-access large language models released by Meta. The Hugging Face blog post "Llama 2 is here - get it on Hugging Face" covers Llama 2 and how to use it with Transformers and PEFT.

To run it yourself, install the required dependencies and provide your Hugging Face access token. Hugging Face models can be run locally through the `HuggingFacePipeline` class, so we are going to set up a language generation pipeline using Hugging Face's transformers library. This code also lets you use LangChain's advanced tooling (agents, chains, and so on) with Llama 2, as described in "Llama 2, LangChain and HuggingFace Pipelines" (July 28, 2023, 1 min read).
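The setup described above can be sketched roughly as follows. This is a minimal sketch, not the original post's code: the checkpoint name `meta-llama/Llama-2-7b-chat-hf`, the `HF_TOKEN` environment variable, the helper names `read_hf_token` and `build_llm`, and the `langchain_community` import path are all assumptions, and older library versions may use different import paths or argument names.

```python
import os

# Assumed checkpoint name; use any Llama 2 repository you have been granted access to.
MODEL_ID = "meta-llama/Llama-2-7b-chat-hf"


def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the Hugging Face access token stored in an environment variable."""
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set {env_var} to your Hugging Face access token first.")
    return token


def build_llm(model_id: str = MODEL_ID, max_new_tokens: int = 256):
    """Wrap a local transformers text-generation pipeline for use in LangChain."""
    # Imported lazily so read_hf_token works without the heavy dependencies.
    # Assumed install: pip install transformers accelerate torch langchain-community
    from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline
    from langchain_community.llms import HuggingFacePipeline

    token = read_hf_token()
    tokenizer = AutoTokenizer.from_pretrained(model_id, token=token)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        token=token,
        device_map="auto",  # needs accelerate; places weights on available devices
    )
    generator = pipeline(
        "text-generation",
        model=model,
        tokenizer=tokenizer,
        max_new_tokens=max_new_tokens,
    )
    # The returned object can be used like any other LangChain LLM.
    return HuggingFacePipeline(pipeline=generator)
```

With the dependencies installed and access to the model granted, calling `build_llm().invoke("Explain what a llama is.")` should return generated text. The first call downloads the model weights, which can take a while.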

Meta describes Llama 2 as "the next generation of our open source large language model", available for free, including for research. It is released under a bespoke commercial license that balances open access to the models with responsibility and protections; other descriptions call it a very permissive community license. One clause of the license depends on the licensee's monthly active users on the Llama 2 version release date. Meta also states that it is committed to promoting safe and fair use of its tools and features. With each model download you'll receive ...

Llama 2 is a collection of generative text models, both pretrained and fine-tuned, ranging in scale from 7 billion to 70 billion parameters. You can also run and fine-tune Llama 2 in the cloud, or chat with Llama 2 70B online and customize Llama's personality by clicking the settings button.

All three model sizes (7B, 13B, and 70B) are available for download on Hugging Face. There are quite a few things to consider when deciding which iteration of Llama 2 you need. If the full models are too heavy, just grab a quantized model or a fine-tune of Llama 2; TheBloke has several of those, as usual. Ollama lets you run, create, and share large language models. Underpinning all these features is the robust llama.cpp, which is why you have to download the model in the GGUF file format. Mac users or Windows CPU users ...
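One of the things to consider when choosing a size is how much memory the weights alone need. A back-of-the-envelope sketch, assuming the nominal parameter counts (7B, 13B, 70B) and typical storage widths of 2 bytes per parameter for fp16, 1 byte for 8-bit, and about 0.5 bytes for 4-bit quantization. The function name `weight_memory_gb` is hypothetical, and the estimate ignores the KV cache, activations, and quantization overhead, so real usage will be higher.

```python
# Nominal parameter counts for the three Llama 2 sizes (assumed round numbers).
PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Approximate bytes needed to store one parameter at each precision (assumption).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Estimate the memory needed for the weights alone, in gigabytes (1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def estimate_all() -> dict:
    """Return {model size: {precision: estimated GB}} for every combination."""
    return {
        size: {
            precision: weight_memory_gb(n_params, width)
            for precision, width in BYTES_PER_PARAM.items()
        }
        for size, n_params in PARAMS.items()
    }


for size, row in estimate_all().items():
    cells = ", ".join(f"{precision}: ~{gb:.1f} GB" for precision, gb in row.items())
    print(f"Llama 2 {size}: {cells}")
```

By this estimate the 7B model needs about 14 GB in fp16 but only about 3.5 GB at 4 bits, which is why quantized files are the usual choice on laptops and CPU-only machines.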
